GPU Compute

Access the Industry’s Highest Performance GPUs

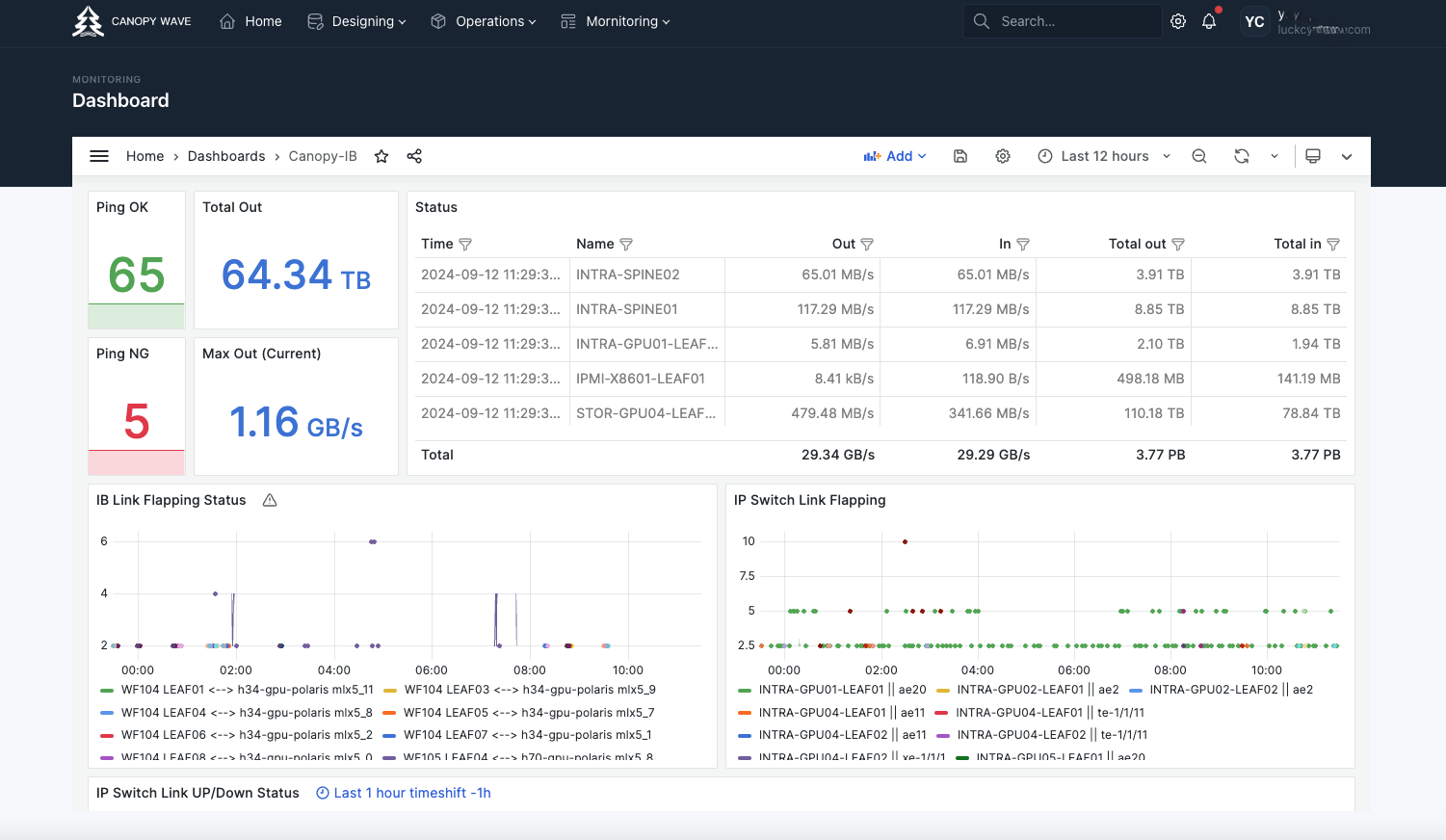

Canopy Wave offers top-of-the-line NVIDIA GPUs designed for large-scale AI training and inference. Highly configurable and highly available.

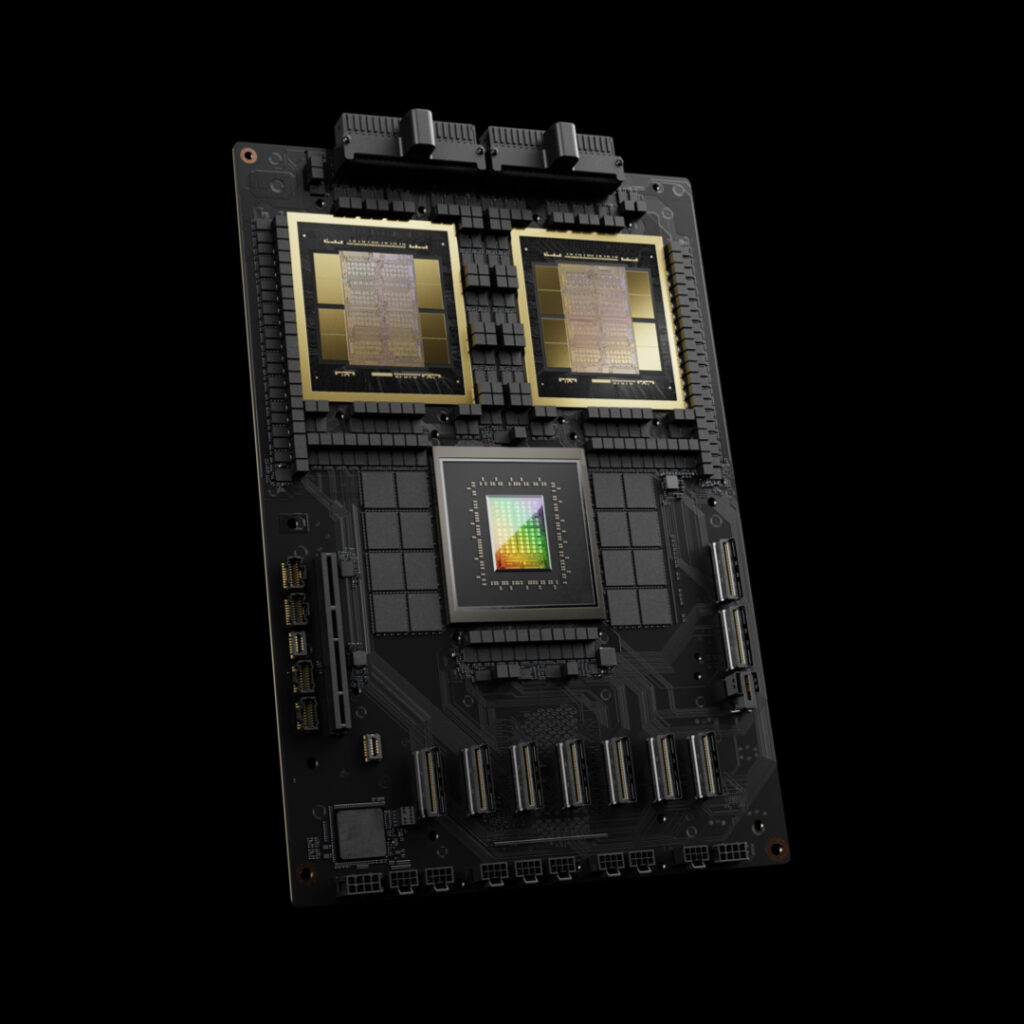

The NVIDIA B200 GPU is based on the latest Blackwell architecture, with 180GB of HBM3e memory at 8TB/s. Compared with the NVIDIA Hopper generation, it delivers up to 15X faster real-time inference on massive models such as GPT-MoE-1.8T and up to 3X faster LLM training.

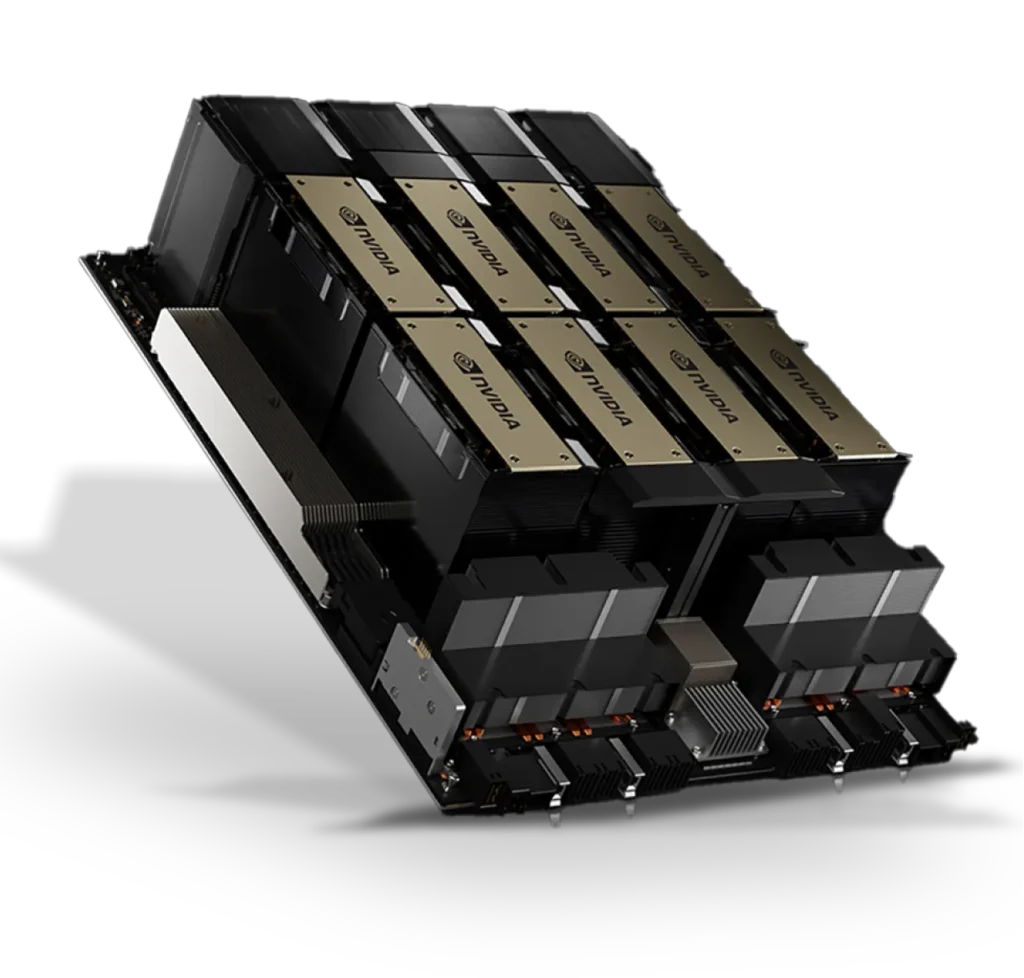

The NVIDIA H200 is the first GPU to offer 141GB of HBM3e memory at 4.8TB/s, nearly double the capacity of the NVIDIA H100 GPU with 1.4X more memory bandwidth. The H200's larger, faster memory accelerates generative AI and LLMs, and advances scientific computing for HPC workloads with better energy efficiency and lower total cost of ownership (TCO).

The NVIDIA H100 Tensor Core GPU delivers exceptional performance, scalability, and security for every workload. Compared with the NVIDIA HGX A100, H100 GPUs offer 7X better efficiency in high-performance computing (HPC) applications, up to 9X faster AI training on the largest models, and up to 30X faster AI inference.

Talk to our Experts

Contact us to learn more about our GPU compute cloud solutions and find the right fit for your workloads.