Qwen3.5-397B-A17B Now Available

Native Multimodal Enterprise AI

GLM-5 Launches on Canopy Wave

State-of-the-art open-source coding model for long-horizon agentic tasks and complex engineering systems.

MiniMax M2.5 Launches on Canopy Wave

Designed for high-throughput, low-latency production environments.

Kimi K2.5 is live on Canopy Wave

An open-source, native multimodal agentic model built for long-horizon reasoning and execution.


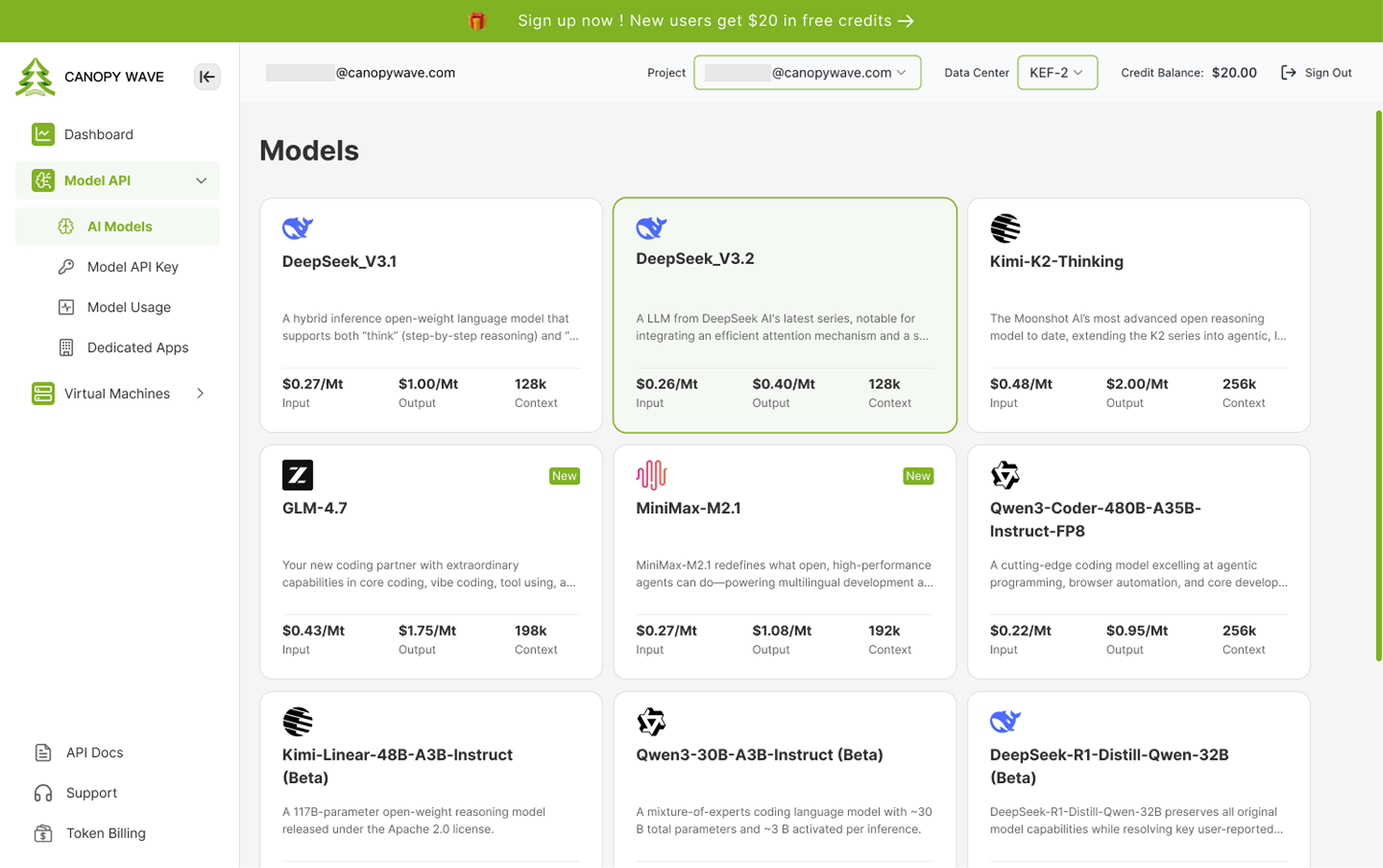

Model Library

We provide advanced, secure, and fast open-source models. Try them quickly in chat, or integrate them into your applications through our API.

Instantly allocated GPU resources and ready-to-go AI resources

End-to-End Secure Operations

Our proprietary GPU management platform offers real-time monitoring, health alerts, and resource optimization. Backed by 24/7 support, we ensure peak cluster performance and stability.
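To make "health alerts" concrete, here is a minimal sketch of threshold-based GPU alerting. It is not Canopy Wave's platform code: it assumes metrics collected with `nvidia-smi --query-gpu=index,temperature.gpu,utilization.gpu,memory.used,memory.total --format=csv,noheader,nounits`, and the names `GpuSample`, `parse_nvidia_smi_csv`, `health_alerts`, and both threshold values are hypothetical, chosen only for illustration.

```python
# Minimal sketch of threshold-based GPU health alerts.
# ASSUMPTIONS: input is nvidia-smi CSV output produced with
#   --query-gpu=index,temperature.gpu,utilization.gpu,memory.used,memory.total
#   --format=csv,noheader,nounits
# and the thresholds below are illustrative, not a real platform's settings.
from dataclasses import dataclass

TEMP_LIMIT_C = 85           # hypothetical temperature alert threshold (Celsius)
MEM_LIMIT_FRACTION = 0.95   # hypothetical memory-usage alert threshold


@dataclass
class GpuSample:
    index: int
    temperature_c: int
    utilization_pct: int
    memory_used_mib: int
    memory_total_mib: int


def parse_nvidia_smi_csv(text: str) -> list[GpuSample]:
    """Parse one GpuSample per CSV row (fields must all be integers)."""
    samples = []
    for line in text.strip().splitlines():
        fields = [int(field.strip()) for field in line.split(",")]
        samples.append(GpuSample(*fields))
    return samples


def health_alerts(samples: list[GpuSample]) -> list[str]:
    """Return one human-readable alert per threshold violation."""
    alerts = []
    for s in samples:
        if s.temperature_c >= TEMP_LIMIT_C:
            alerts.append(f"GPU {s.index}: temperature {s.temperature_c} C")
        if s.memory_used_mib / s.memory_total_mib >= MEM_LIMIT_FRACTION:
            alerts.append(
                f"GPU {s.index}: memory {s.memory_used_mib}/{s.memory_total_mib} MiB"
            )
    return alerts


# Example: GPU 1 is both hot and nearly out of memory.
sample = "0, 62, 97, 40000, 81559\n1, 88, 100, 80000, 81559"
for alert in health_alerts(parse_nvidia_smi_csv(sample)):
    print(alert)
```

A production monitor would also need to handle non-numeric fields such as `[N/A]`, which this sketch does not.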

Customized Service

We provide dedicated AI infrastructure and full-lifecycle AI services, such as model fine-tuning and agent customization tailored to your needs, to help enterprises grow faster, smarter, and more cost-effectively.

Canopy Wave Private Cloud

Industry-leading GPU cluster performance with 99.99% uptime. All your GPUs are housed in the same data center, protecting both your workloads and your data privacy.
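As a quick sanity check on what a 99.99% uptime figure allows, the sketch below computes the maximum downtime over a period. It assumes availability is measured over a 365-day year or a 30-day month; the actual SLA measurement window is not stated here, and `downtime_minutes` is a hypothetical helper.

```python
# Downtime budget implied by an availability target.
# ASSUMPTION: availability is measured over a fixed window of `days`
# (365 for a year, 30 for a month); real SLA windows may differ.
MINUTES_PER_DAY = 24 * 60


def downtime_minutes(availability: float, days: float) -> float:
    """Maximum downtime in minutes allowed over `days` at the given availability."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be a fraction between 0 and 1")
    return (1.0 - availability) * days * MINUTES_PER_DAY


print(f"per year:  {downtime_minutes(0.9999, 365):.2f} min")  # per year:  52.56 min
print(f"per month: {downtime_minutes(0.9999, 30):.2f} min")   # per month: 4.32 min
```

In other words, 99.99% availability leaves roughly 52.6 minutes of downtime per year, or about 4.3 minutes per 30-day month.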

Pay for What You Use

Pay wholesale prices only for the AI resources you actually consume, with no hidden fees.

NVIDIA GB200, B200, H100, and H200 GPUs now available

NVIDIA GB200 NVL72

$9/GPU/hr

- 36 Grace CPUs + 72 Blackwell GPUs

- 20.48 TB/s GPU-to-GPU interconnect

- 192 GB HBM3e (8 stacks) per GPU

- 1.8 TB/s NVLink bandwidth per GPU

- Up to 144 MIG instances at 12 GB each
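To see what the $9/GPU/hr GB200 NVL72 price means for a real job, here is a rough cost estimator. It assumes simple per-GPU-hour billing in whole hours at the listed rate, with no minimums, commitments, or discounts; actual billing terms may differ, and `estimate_cost` is a hypothetical helper. `Decimal` is used so currency arithmetic stays exact.

```python
# Rough cost estimate for per-GPU-hour billing.
# ASSUMPTIONS: the $9/GPU/hr GB200 NVL72 list price, billing in whole
# GPU-hours, and no minimums, commitments, or volume discounts.
from decimal import Decimal

GB200_RATE_USD = Decimal("9")  # USD per GPU per hour (listed price)


def estimate_cost(num_gpus: int, hours: int, rate: Decimal = GB200_RATE_USD) -> Decimal:
    """Total cost in USD for `num_gpus` GPUs running for `hours` hours."""
    if num_gpus < 0 or hours < 0:
        raise ValueError("num_gpus and hours must be non-negative")
    return rate * num_gpus * hours


print(estimate_cost(72, 1))   # full NVL72 rack for one hour: 648
print(estimate_cost(72, 24))  # full rack for one day: 15552
print(estimate_cost(8, 10))   # 8 GPUs for 10 hours: 720
```

A full 72-GPU rack therefore comes to $648 per hour at this rate.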

Providing secure and efficient solutions for different use cases


01

Serverless Inference

- Our Inference as a Service (InfaaS) runs AI inference for you, accessible through the Canopy Wave API.
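A minimal sketch of what a serverless inference call could look like, using only the Python standard library. It assumes an OpenAI-compatible `/chat/completions` endpoint; the base URL, model ID, and helper names (`build_chat_request`, `send`) are hypothetical placeholders, so check the Canopy Wave API documentation for the real endpoint, model IDs, and authentication scheme.

```python
# Sketch of a serverless inference call over HTTP.
# ASSUMPTIONS: the service exposes an OpenAI-compatible /chat/completions
# endpoint with Bearer-token auth; BASE_URL and model IDs are placeholders.
import json
import urllib.request

BASE_URL = "https://api.example.com/v1"  # hypothetical; replace with the real endpoint


def build_chat_request(api_key: str, model: str, prompt: str,
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a chat-completions POST request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

Usage would look like `print(send(build_chat_request("YOUR_API_KEY", "hypothetical-model-id", "Hello")))`. Separating request construction from sending keeps the request easy to inspect and test without network access.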

02

Dedicated Endpoint

- Run real-time inference workloads securely and efficiently on cloud-based GPU instances.

03

AI Model Training

- Accelerate AI training with high-performance compute and low-latency networking, for applications in NLP, computer vision, recommendation systems, and autonomous driving.

04

GB200 Cluster with RoCEv2 Network Solution

- A turnkey GB200 supercluster engineered for 24/7 production, featuring self-managing and self-monitoring capabilities.

Powered By Our Global Network

Our data centers run on Canopy Wave's global, carrier-grade network, helping you reach millions of users worldwide faster than ever, with the security and reliability that only a proprietary network provides.

Explore Canopy Wave

Trust, A Core Requirement of AI

We build our values around openness, quality, and trust. By James Liao, Founder and CTO

The Rise of Enterprise AI: Trends in Inferencing and GPU Resource Planning

AI Agent Summit keynote by James Liao, Canopy Wave

Accelerating Protein Engineering with Canopy Wave's GPUaaS

Foundry BioSciences Case Study

How to Run GPT-OSS Locally on a Canopy Wave VM

Step-by-step guide for local deployment

Canopy Wave GPU Cluster Hardware Product Portfolio

This portfolio outlines modular hardware components and recommended configurations