On-Demand

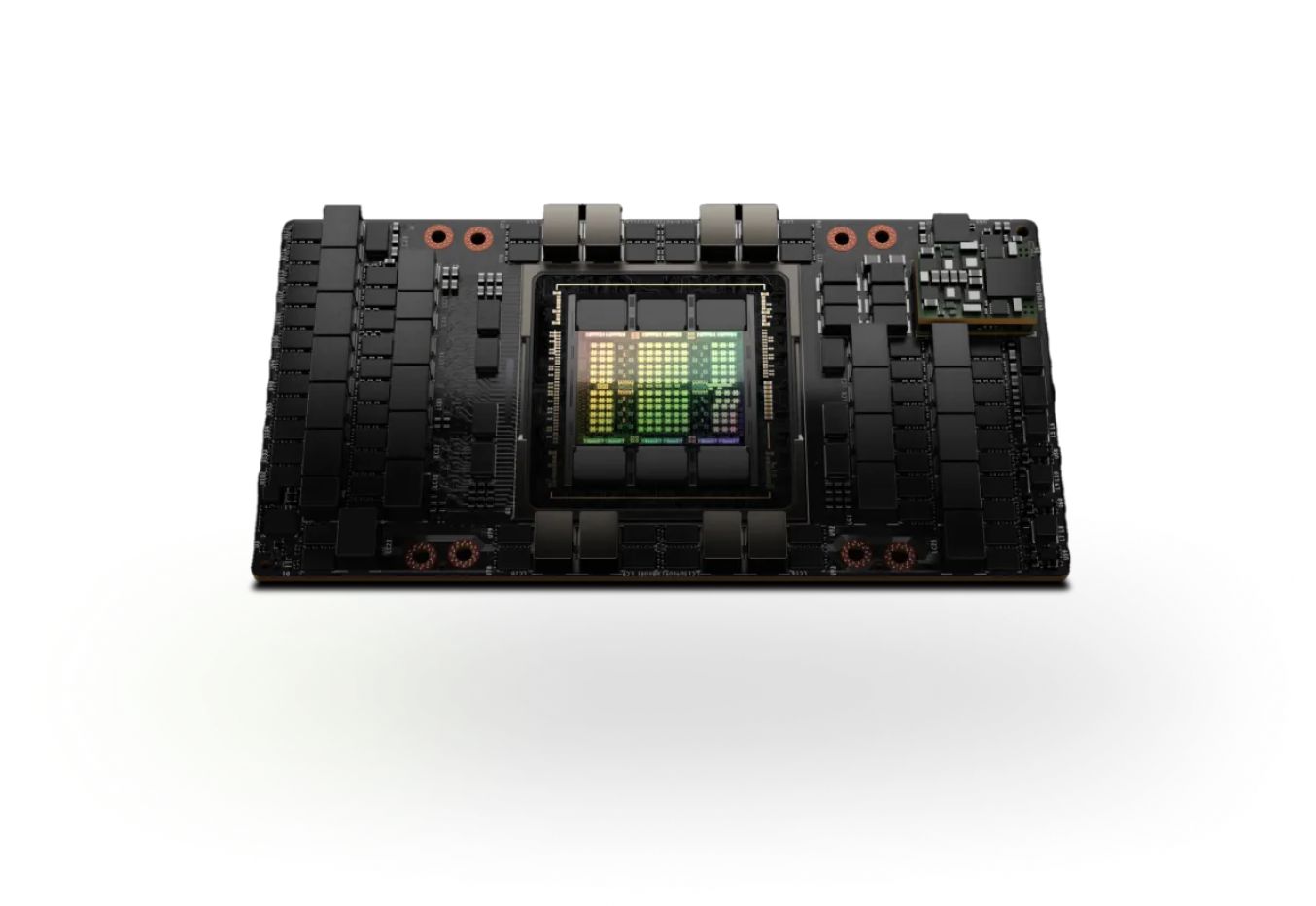

NVIDIA HGX H100

Enterprise H100 GPU instances, on-demand and optimized for training, inference and HPC.

Why Canopy Wave H100 GPU Cloud

Built for Unmatched Scale, Speed, and Reliability

High Throughput & Memory

Each H100 provides 80 GB of HBM3 memory and 3.35 TB/s of memory bandwidth, suited to memory-bound inference and model parallelism.
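As a rough illustration of what 80 GB per GPU means for model parallelism, the sketch below estimates the minimum number of H100s needed just to hold a model's weights. The function name, the bytes-per-parameter table, and the 80% usable-memory fraction are assumptions made for this example, not Canopy Wave or NVIDIA figures; activations, KV cache, and optimizer state are ignored, so real deployments need more headroom.

```python
import math

H100_MEMORY_GB = 80  # per-GPU HBM3 capacity quoted above

# Bytes per parameter for common precisions (weights only).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def min_gpus_for_weights(num_params: float,
                         precision: str = "fp16",
                         usable_fraction: float = 0.8) -> int:
    """Lower bound on the GPUs needed to hold the weights alone.

    usable_fraction is an assumed share of memory left for weights after
    reserving room for runtime overhead; adjust it for your workload.
    """
    weight_gb = num_params * BYTES_PER_PARAM[precision] / 1e9
    usable_gb = H100_MEMORY_GB * usable_fraction
    return max(1, math.ceil(weight_gb / usable_gb))


if __name__ == "__main__":
    for params, precision in [(7e9, "fp16"), (70e9, "fp16"), (70e9, "fp8")]:
        gpus = min_gpus_for_weights(params, precision)
        print(f"{params / 1e9:.0f}B params @ {precision}: at least {gpus} GPU(s)")
```

Under these assumptions, a 70B-parameter model in FP16 (about 140 GB of weights) does not fit on one GPU, which is where multi-GPU nodes and model parallelism come in.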

Training & Inference Optimized

Fourth-generation Tensor Cores support FP8, FP16, and TF32 precision, and a dedicated Transformer Engine manages FP8/FP16 mixed precision for LLM efficiency.

SXM Superiority

HGX SXM5 H100 uses NVLink and NVSwitch for GPU-to-GPU communication, with far higher interconnect bandwidth than PCIe variants.

VM Flexibility

Deploy 2-8 GPUs in minutes, with near-bare-metal performance and easy scaling to clusters.

Superior Performance Benchmarks vs. A100

Inference Acceleration

3.2x faster

Delivers 1,979 TFLOPS of FP16 Tensor Core throughput (NVIDIA's peak figure with sparsity), about 3.2x the A100's 624 TFLOPS, for low-latency AI responses.

LLM Training Boost

30x performance

Up to 30x A100 performance on large Transformer-based models (a best-case vendor figure), streamlining generative AI development.

Bandwidth Edge

1.6x memory bandwidth

3.35 TB/s of memory bandwidth (1.6x the A100's 2.039 TB/s) reduces memory bottlenecks on large models and datasets.

HPC Dominance

7x speed

Up to 7x A100 speed in simulations and analytics, well suited to scientific computing and big data.
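Two of the ratios above come with both operands stated (FP16 Tensor Core throughput and memory bandwidth), so they can be checked directly. The short sketch below reproduces that arithmetic; the dictionary keys and the `speedup` helper are names chosen for this example, and the 30x and 7x figures are not recomputed because the page gives no underlying numbers for them.

```python
# Peak figures as quoted in this section.
H100 = {"fp16_tflops": 1979.0, "mem_bw_tbs": 3.35}
A100 = {"fp16_tflops": 624.0, "mem_bw_tbs": 2.039}


def speedup(metric: str) -> float:
    """Ratio of the H100 figure to the A100 figure for one metric."""
    return H100[metric] / A100[metric]


if __name__ == "__main__":
    for metric in H100:
        print(f"{metric}: {speedup(metric):.2f}x")
```

These are ratios of peak specifications; measured speedups on a real workload depend on the model, batch size, and software stack.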

H100 GPU Cloud Pricing & Flexibility

Canopy Wave offers H100 GPU cloud instances on a pay-as-you-go model with per-minute billing and no long-term lock-in. Select single instances or preconfigured multi-GPU nodes (2x, 4x, 8x) and scale elastically for training bursts or sustained inference traffic.

On-Demand

$2.25/GPU/hour—Spin up 2-8 GPUs instantly

- On-Demand Access: Launch instantly, pause effortlessly, no commitments.

- Transparent Billing: Per-minute/hour rates, zero hidden fees, full control.

- Scalable Sizing: From solo GPUs to expansive clusters, sized to match your budget and ambition.
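To make the on-demand pricing concrete, here is a minimal cost estimate based on the $2.25 per GPU-hour rate and per-minute billing described above. The function name is hypothetical, and rounding each run up to a whole minute is an assumption made for this sketch; the actual billing granularity and rounding rules should be confirmed with Canopy Wave.

```python
import math

ON_DEMAND_RATE_PER_GPU_HOUR = 2.25  # USD, on-demand rate quoted above


def estimate_on_demand_cost(num_gpus: int, runtime_seconds: float) -> float:
    """Estimated cost in USD for one run billed per minute.

    Assumes partial minutes are rounded up to the next whole minute.
    """
    billed_minutes = math.ceil(runtime_seconds / 60)
    cost = num_gpus * billed_minutes * ON_DEMAND_RATE_PER_GPU_HOUR / 60
    return round(cost, 2)


if __name__ == "__main__":
    # An 8-GPU node for exactly one hour.
    print(estimate_on_demand_cost(8, 3600))
    # The same node for 90 minutes and 30 seconds (billed as 91 minutes here).
    print(estimate_on_demand_cost(8, 90 * 60 + 30))
```

Under these assumptions, an 8-GPU node costs $18.00 for a full hour, and a short burst is charged only for the minutes used instead of a full hour.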

Reserved

Custom enterprise terms: contact sales for tailored deals.

- Pricing: Lower pricing, with volume discounts available via sales.

- Configuration: Fixed nodes, IPs, and topology; full system customization.

- Availability & Support: Guaranteed capacity, priority allocation, and dedicated 24/7 support.

Enterprise Security & Unwavering 24/7 Support

Trust in robust protections and expert assistance

Isolation Excellence: VPCs, private subnets, and dedicated hosts safeguard sensitive AI workloads.

Round-the-Clock Aid: 24/7 monitoring for H100 clusters, with priority resolution and deployment runbooks.

Case Study

What H100 GPU Cloud Excels At

Transform protein engineering and beyond with GPU-accelerated innovation.

Accelerating Protein Engineering via Canopy Wave's GPUaaS

Questions and Answers

PCIe and H100 SXM?

and inference?

Get Started

Launch your H100 cluster in minutes, or contact us to reserve capacity under a long-term contract.