On-Demand

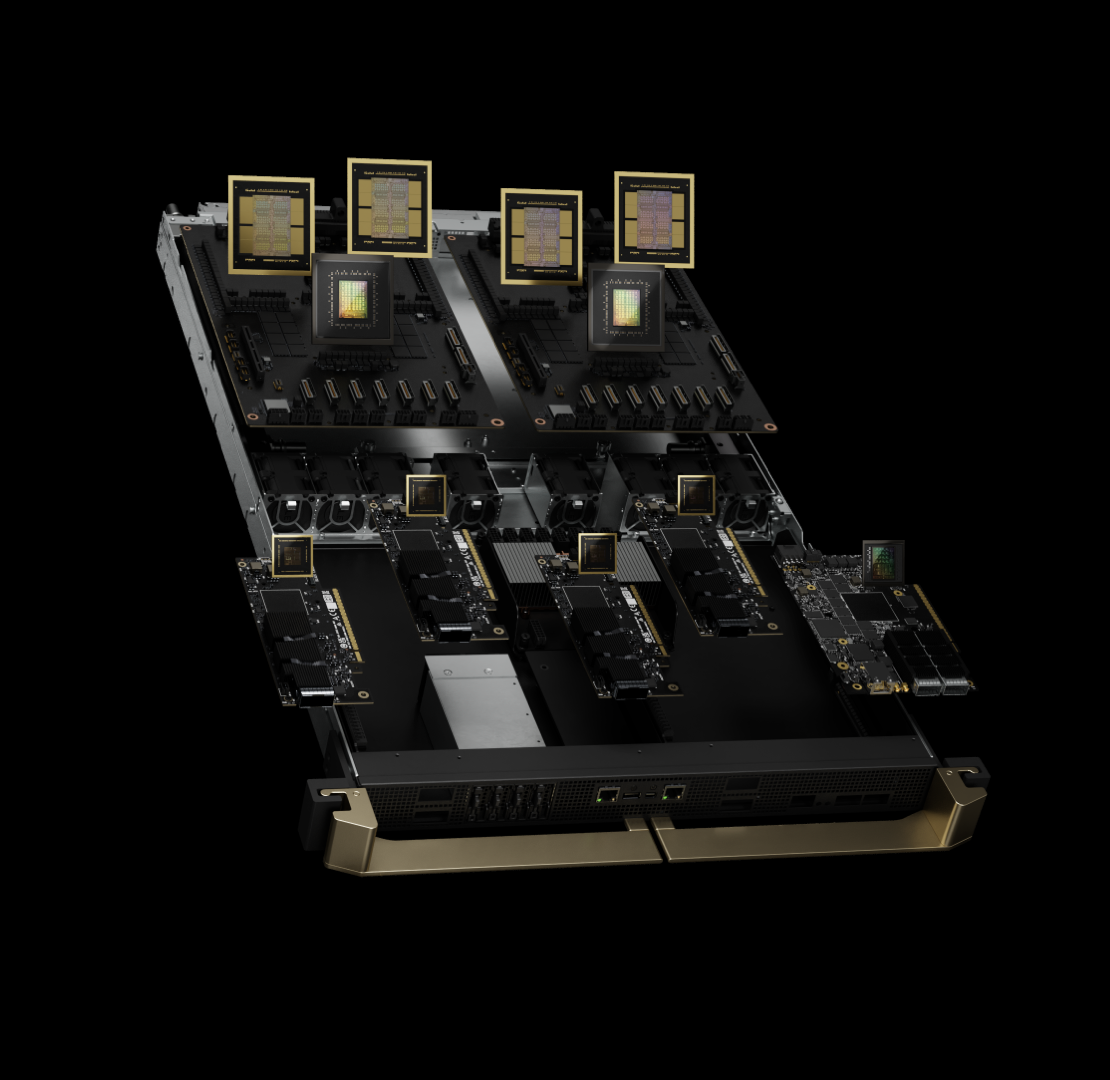

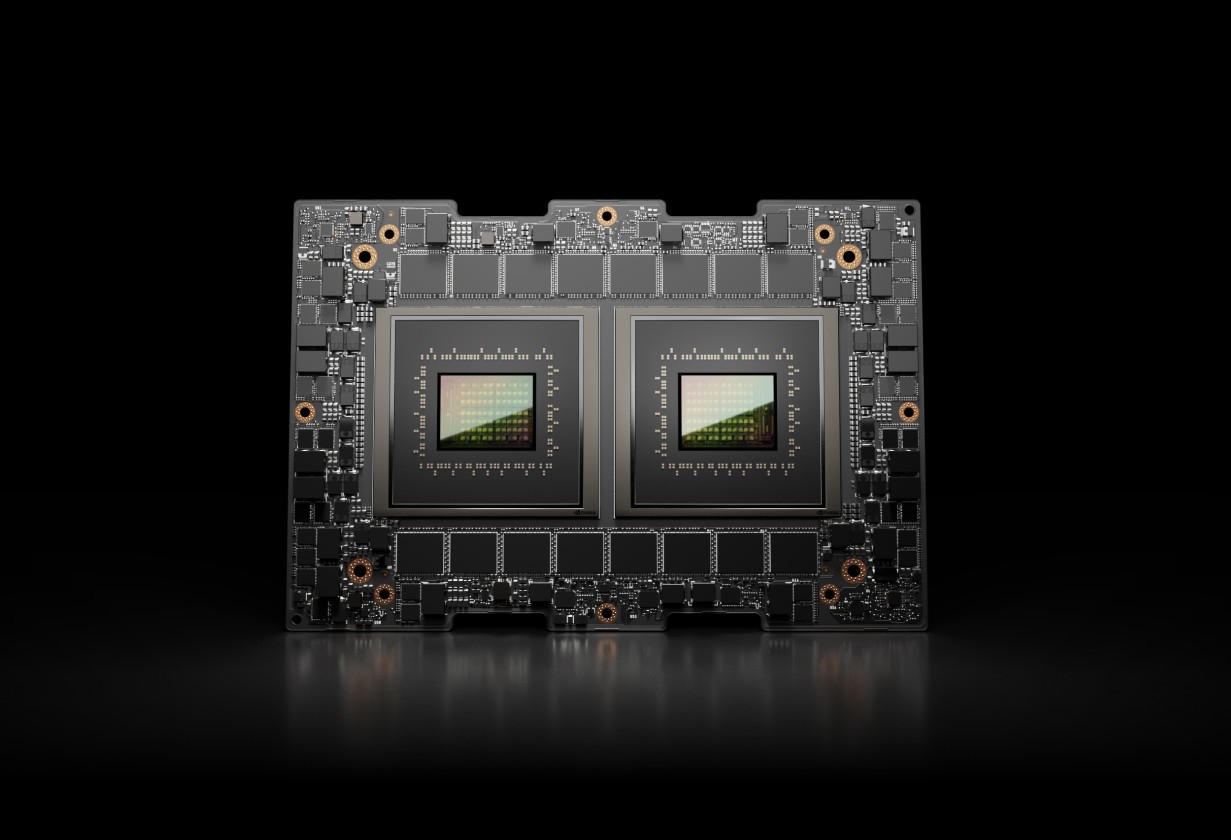

NVIDIA GB200 NVL72

No matter the task — AI training, data crunching, or heavy graphics — GB200 brings unmatched performance to your workflow

Canopy Wave On-Demand Flexibility

Access high-performance computing resources anytime, anywhere through the GB200 on-demand service. Scale GPU resources instantly to meet your workload requirements, with no long-term commitments or upfront costs

Flexible Resource Customization

Dynamically adjust GPU configurations and scale from single-GPU to multi-GPU clusters based on your business needs

Pay Only for What You Use

Per-minute billing with no upfront fees or long-term contracts, optimizing your computing costs

Rapid Deployment and Instant Launch

Deploy environments quickly and launch instances within minutes, accelerating your AI training and inference workflows

Why NVIDIA GB200 NVL72 on Canopy Wave Clusters?

The Security of Private Cloud

Generate, add, delete, or change your SSH and API keys. Configure separate security groups and control how you work with your team

24/7 Support

Around-the-clock online support with no delay in responding to requests. Real-time interactive help resolves problems without overnight waits

Visibility Platform

The Canopy Wave DCIM Platform gives full visibility into your AI cluster—monitor resource use, system health, and uptime in real time via a central dashboard.

Aiming to Promote Next-Generation AI Acceleration

GB200 NVL72 delivers outstanding performance in large language model (LLM) inference, retrieval-augmented generation (RAG), and data processing tasks

Faster LLM inference vs. HGX H100 Tensor Core GPU

Faster LLM training vs. HGX H100

More energy efficient vs. HGX H100

Powering the New Era of Computing

Next-Level LLM Inference and Training

- GB200 NVL72 uses a second-generation Transformer Engine with FP4/FP8 precision and fifth-generation NVLink

- Powered by next-generation Tensor Cores with microscaling formats for higher accuracy and throughput

- Enables 4× faster LLM training at scale

Revolutionary Energy Efficiency

- FP4 quantization reduces inference energy to 4% of H100

- Liquid cooling increases compute density and cuts power use

- Delivers 25× performance at the same power budget

Accelerated Data Processing

- Blackwell architecture combines HBM, NVLink-C2C, and decompression engines

- Speeds up enterprise database queries by 18× compared to CPU

- Achieves 5× better total cost of ownership

Ready to get started?

Or contact us to reserve a long-term contract