NVLink 5.0 is a Game-Changer for AI Agent Development

Introduction

The evolution of Artificial Intelligence is entering a new, transformative phase, moving from standalone models that respond to prompts to sophisticated AI Agents that autonomously perceive, reason, act, and learn. These agents promise to revolutionize everything from software development and customer service to scientific discovery. However, their path to ubiquity is blocked by a formidable bottleneck: not just raw compute power, but the crippling communication bottleneck that emerges when an agent's "brain" is distributed across multiple powerful GPUs.

The launch of NVIDIA's Blackwell architecture, spearheaded by the revolutionary B200 GPU, and its groundbreaking NVLink 5.0 technology isn't merely an incremental upgrade; it is the critical enabler that will unlock the true potential of AI Agents. This article explores how the B200, powered by NVLink 5.0, is dismantling previous limitations and acting as the catalyst for a new era of intelligent, autonomous systems.

The Communication Bottleneck: The Invisible Wall Holding AI Agents Back

To understand why the B200 and NVLink 5.0 are so pivotal, one must first understand the nature of a sophisticated AI Agent. Unlike a model that generates an image or answers a question, an agent operates in a complex, iterative loop:

1. Perception: Receiving multimodal input from its environment (e.g., a user's vocal command, real-time sensor data, a massive codebase).

2. Planning & Reasoning: Decomposing a high-level goal into an intricate sequence of actionable steps. This involves consulting its internal knowledge, making logical inferences, evaluating options, and predicting outcomes.

3. Action: Executing steps by calling a diverse set of tools or APIs (e.g., executing a code function, querying a database, booking a flight, controlling a robotic arm).

4. Learning: Refining its internal model and future approach based on the success or failure of its actions, often through reinforcement learning.

This workflow is not a single, monolithic computation. It is a dynamic, stateful process involving rapid, fine-grained, and constant communication between different specialized components of a massive model. In previous architectures, when these components were spread across multiple GPUs—a necessity for tackling complex problems—the interconnects became a severe traffic jam.

High Latency between GPUs meant the agent's "thought process" was constantly stalled, waiting for critical data to be synchronized across its neural network. This resulted in sluggish response times, making real-time interaction impossible.

Limited Bandwidth restricted the volume of contextual information that could be shared, effectively forcing the agent to work with a fragmented and incomplete picture of its task. It was like trying to solve a jigsaw puzzle while only being allowed to see a few pieces at a time.

As a result, agents were hampered in their ability to tackle complex, multi-step problems with any semblance of human-like fluency. They possessed intelligence but were forced to think in slow motion. Developing more capable agents was, therefore, not just a software and algorithmic challenge; it was a fundamental hardware infrastructure problem. The dream of truly autonomous agents was waiting for a hardware revolution.

Blackwell's B200 and NVLink 5.0: Building a Unified "Agent Brain"

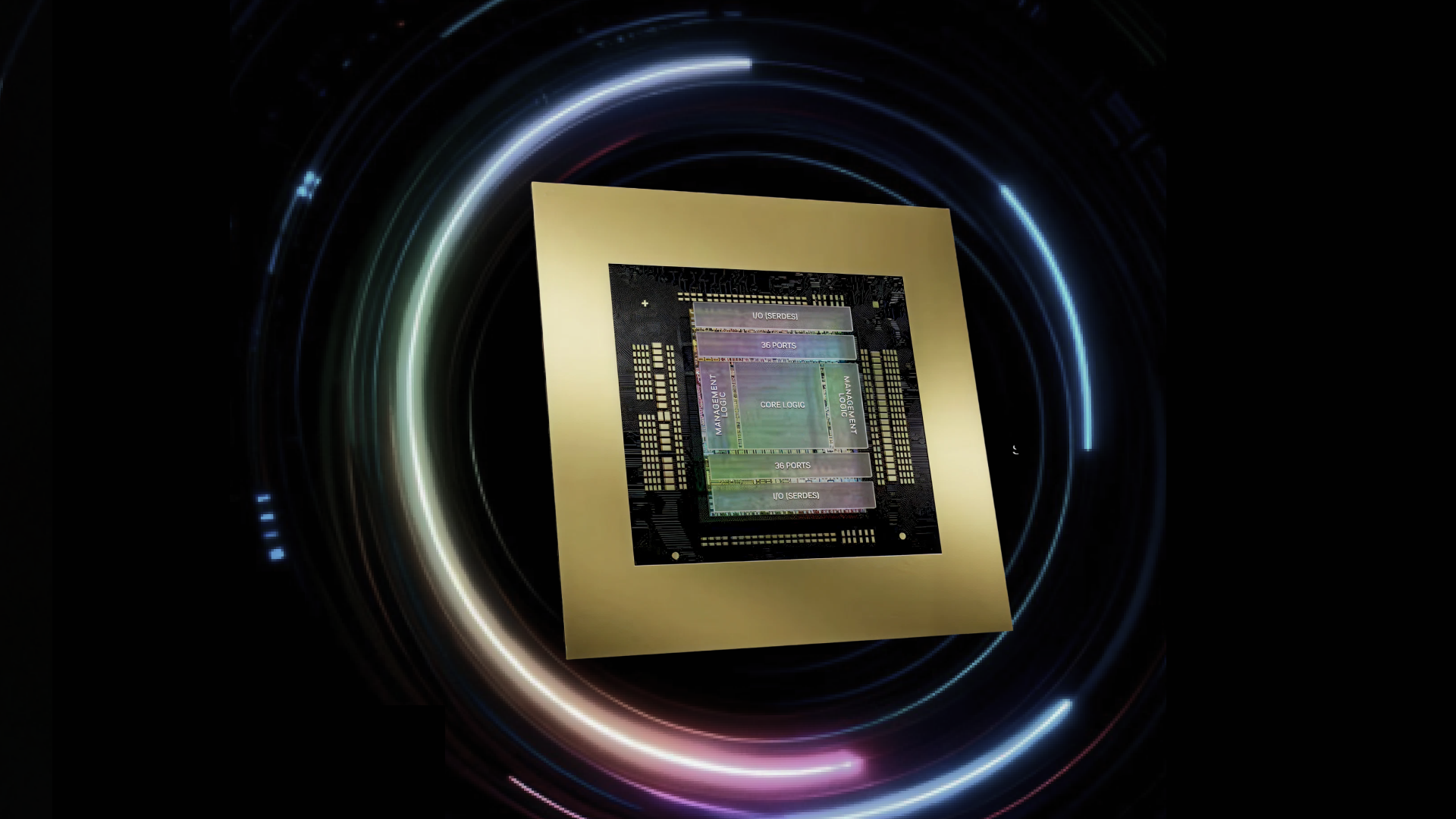

The B200 GPU is the physical embodiment of the Blackwell architecture and addresses this core impediment head-on. NVLink 5.0 is not a simple speed boost; it is a reimagining of how GPUs communicate, designed from the ground up for the age of agency and symbiotic collaboration. NVLink 5.0 serves as the core interconnect technology of the NVIDIA Blackwell architecture. As the flagship product of this architecture, the B200 GPU deeply integrates and optimizes NVLink 5.0 capabilities, while this technology is also applicable to other GPU products under the Blackwell architecture.

1. Unprecedented Bandwidth: Fueling Real-Time Reasoning and Context Exchange

The B200 GPU leverages NVLink 5.0 to deliver a staggering 1.8 TB/s of bidirectional bandwidth between GPUs. This is a 2x increase over the previous generation (Hopper H100) and orders of magnitude faster than standard networking protocols. This raw speed is the lifeblood of a complex AI Agent.

What this means for AI Agents: The agent's entire operational state—its long-term context window, its evolving plan, its intermediate calculations, and the results from its tool calls—can be shared between B200 GPUs almost instantaneously. This allows the different specialized parts of a massive model (e.g., a reasoning module, a tool-use module, and a memory module) to operate in perfect, tight concert, as if they were a single, unified brain. There is no longer a performance penalty for distributing complex cognitive workloads. The agent can maintain a vast, rich context of its entire interaction history and current environment, which is essential for solving intricate problems.

2. Seamless Scalability and Unified Memory: The Foundation for Giant Agents

What this means for AI Agents: Developers are no longer constrained by the memory limits of a single GPU. They can now create and deploy single, massive agents on clusters of B200s that were previously unimaginable. Imagine an agent that can hold an entire corporation's codebase, all its internal documentation, and real-time operational data in its context to solve a complex business problem—this is the scale enabled by the B200's NVLink 5.0 unified memory. The NVLink 5.0 technology in the B200 GPU, with its high-bandwidth, low-latency GPU interconnect capabilities, lays a critical foundation for building distributed hardware clusters capable of supporting trillion-parameter models. Realizing such massive-scale models also relies on the B200's own high-performance compute cores, large-capacity VRAM, and comprehensive hardware-software co-optimization—including model parallelism algorithms and efficient distributed training frameworks such as Megatron-LM.

3. The B200's Computational Muscle

It is crucial to note that the B200 GPU itself provides the immense computational power required to execute these complex agent models. Its advanced transformer engine and massive core count are designed to handle the intense computational demands of planning, reasoning, and tool-calling simultaneously with low-latency communication. The B200 isn't just about talking fast; it's about thinking deeply and acting decisively at an unprecedented scale.

The Future, Powered by B200: Use Cases Reimagined

The implications of the B200's leap in performance and interconnect technology extend across every industry poised to adopt AI Agents:

Scientific Research Agents: An agent running on a B200 cluster can autonomously control a complex lab instrument, process the streaming experimental data on one set of GPU cores, simultaneously run molecular simulations to interpret the results on another, use the findings to adjust the experiment parameters, and then decide on the next hypothesis to test—all in a continuous, real-time loop without latency-induced delays. This closed-loop autonomy could compress years of research into months.

Software Engineering Agents: Instead of just suggesting a line of code, a fully-realized agent powered by the B200 could comprehend an entire monolithic codebase, plan a major refactoring or feature implementation, execute the changes by writing and testing code across hundreds of files, run validation suites, and deploy the final product—a process requiring constant, deep communication and state sharing across its entire neural network, enabled by NVLink 5.0.

Autonomous Systems: In robotics and autonomous vehicles, agents must make millisecond-level decisions based on a fusion of video, lidar, and radar data. The B200's NVLink 5.0 enables the sub-models processing these different sensor streams to merge their understanding instantaneously, creating a richer, safer, and more accurate world model for the agent to act upon. The low latency ensures that actions—like obstacle avoidance—are executed without deadly hesitation.

Conclusion: The Symbiotic Path Forward with Canopy Wave

The narrative of AI progress is often centered exclusively on algorithms, data, and model sizes. However, NVIDIA's Blackwell architecture, its flagship B200 GPU, and NVLink 5.0 technology make a compelling case that the most profound breakthroughs occur at the intersection of hardware and software innovation. By solving the fundamental communication and computation challenges that have constrained distributed AI, the B200 has provided the necessary physical infrastructure for AI Agents to evolve from promising prototypes and narrow tools into reliable, powerful, and truly transformative partners.

This hardware revolution creates a symbiotic relationship: more advanced hardware like the B200 enables more intelligent agents, and the demands of those more intelligent agents drive the next generation of hardware innovation. It is a virtuous cycle that is accelerating the pace of progress exponentially.

At Canopy Wave, we are at the forefront of this infrastructure revolution. Our expertise in designing, building, and optimizing next-generation GPU clusters based on the NVIDIA B200 GPU ensures that your organization gains not only raw computational power but also a seamlessly interconnected "Agent Brain." We provide the foundational platform that enables developers and enterprises to build, train, and deploy the next generation of AI agents—capable of reasoning, acting, and learning without constraints—unlocking new frontiers of productivity and discovery.

The future of AI is autonomous, contextual, and interactive. The path to that future is built on the NVIDIA B200 and interconnected by the lightning-fast, seamless communication of NVLink 5.0.